Ahead of Global Accessibility Awareness Day 2025 on May 15, Apple has once more unveiled a slew of new features coming to iOS 19, macOS 16, and visionOS 3.

Apple doesn't usually reveal anything about its next operating systems until each year's WWDC, but it now regularly makes an exception for accessibility features. Just as it previewed Eye Tracking for controlling iPhones and iPads ahead of iOS 18, it's now announcing new accessibility features for all of its devices.

"At Apple, accessibility is part of our DNA," Tim Cook said in a statement. "Making technology for everyone is a priority for all of us, and we're proud of the innovations we're sharing this year."

The new features include:

- Accessibility Nutrition Labels on the App Store

- Magnifier for Mac

- Braille Access

- Accessibility Reader

- Live Captions on Apple Watch

- Live Recognition in Apple Vision Pro

Accessibility Nutrition Labels

Apple will add a new section to every app's App Store page, alongside the existing details about privacy and in-app purchases. Before downloading an app, users will be able to see which accessibility features it supports, such as Voice Control, captions, Reduced Motion, and more.

"Accessibility Nutrition Labels are a huge step forward for accessibility," Eric Bridges, the American Foundation for the Blind's president and CEO, said. "Consumers deserve to know if a product or service will be accessible to them from the very start, and... [these] labels will give people with disabilities a new way to easily make more informed decisions and make purchases with a new level of confidence."

Magnifier for Mac

Magnifier has been an iOS Control Center tool for almost a decade, and now it's coming to the Mac. It's meant for external cameras rather than built-in ones, so that users can point the lens at what they need to see.

Apple says that it works with USB cameras, plus iPhones through Continuity Camera. It can also use Desk View and magnify documents on a user's desk.

Braille Access

Braille Access is a new Braille note-taking feature that lets users write notes and also perform calculations using Nemeth Braille. It can also transcribe live conversations into Braille.

Braille Access also works as an app launcher, so users can type the name of an app in Braille to open it. Plus it supports Braille Ready Format (BRF) files, giving users access to a wide range of existing books and documents.

Accessibility Reader

Accessibility Reader is a new reading mode that works across Mac, iPhone, iPad, and Apple Vision Pro. It can be launched from any app, and it lets users adjust font, color, and spacing so they can focus on what they need to read.

Accessibility Reader gives more options for customizing fonts, colors, and sizes — image credit: Apple

As well as being available within any app, Accessibility Reader is also built into the Magnifier app. So on iPhone, iPad, and now also Mac, users can combine Magnifier and Accessibility Reader to read books and restaurant menus.

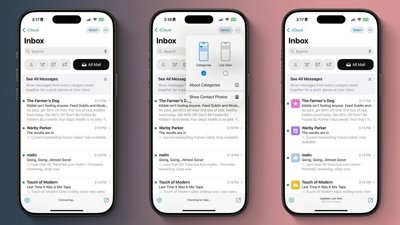

Live Captions on Apple Watch

Live Captions are also coming to Apple Watch, along with Live Listen controls. When a Live Listen session is active on an iPhone, a paired Apple Watch will display Live Captions of what the iPhone hears, and it will also act as a remote control for the session.

Apple says that Live Listen, along with the new Live Captions, can be used alongside the recently launched hearing features in AirPods Pro 2.

Live Recognition in Apple Vision Pro

As part of a series of accessibility features for Apple Vision Pro, visionOS will update the headset's Zoom feature. Like the new Magnifier for Mac, Zoom on Apple Vision Pro will let users magnify everything in view, whether that's virtual content or their surroundings.

Apple Vision Pro is also gaining Live Recognition, which uses VoiceOver to describe objects in front of the user. Apple also says there will be an accessibility API that lets developers offer live, person-to-person assistance through apps such as Be My Eyes.

Updates to existing accessibility features

Alongside the entirely new features, Apple has revealed that it will also be improving existing options. Background Sounds, for instance, which can help with focus and relaxation, will gain new EQ settings for customization.

Personal Voice will be faster and easier to use, according to Apple, and it will now also support Spanish.

The Vehicle Motion Cues feature, which was introduced with iOS 18 to help reduce motion sickness, has also been updated. It adds new ways to customize the animated onscreen dots it can display on iPhone, iPad, and Mac.

Notice the iPhone to the far left: it's feeding a magnified view to the Mac, which is also displaying larger, more visible text — image credit: Apple

Apple says that it is also updating:

- Eye Tracking on iPhone and iPad

- Head Tracking to control devices with head movements

- Switch Control for Brain Computer Interfaces

- Assistive Access for the Apple TV app

- Customizable Music Haptics on iPhone

- Sound Recognition gains Name Recognition

- Voice Control for Xcode

- New languages in Live Captions

- Large Text in CarPlay

Alongside all of these new and updated features, Apple says it is adding an option called Share Accessibility Settings. It lets users quickly and temporarily share their accessibility settings with another device, for example when borrowing someone else's iPhone or iPad.

Accessibility at Apple

"Building on 40 years of accessibility innovation at Apple, we are dedicated to pushing forward with new accessibility features for all of our products," Sarah Herrlinger, Apple's senior director of Global Accessibility Policy and Initiatives, said. "Powered by the Apple ecosystem, these features work seamlessly together to bring users new ways to engage with the things they care about most."

Apple has not specified exactly when these new features will be released, but they will arrive with iOS 19 and the next version of macOS. In previous years, many of the newly revealed accessibility features have rolled out by the end of the year in which they were announced.

William Gallagher

2 Comments

I’d love the ability to disable long press on the locked screen of my iPhone. Same with Apple Watch for changing the faces. It’s beyond annoying having to pay attention to how I hold my phone or what touches my watch.

I agree there should be an option to disable long press or change the length of time required to invoke it, but I never invoke it unintentionally and use it on purpose multiple times per day, so I don’t agree that “internal metrics make Apple think how everybody loves these features while people just get annoyed by all these accidents”. People are different; what is annoying to you is really helpful for me, and it’s good to have options